Is this how AI might eliminate humanity?

The AI 2027 scenario, a paper written by respected AI safety experts, asks what happens if AI reaches superhuman capability within just a few years without being well aligned with human goals. The paper argues that if safety and governance don't keep pace, the risks are existential: they threaten humanity's very survival.

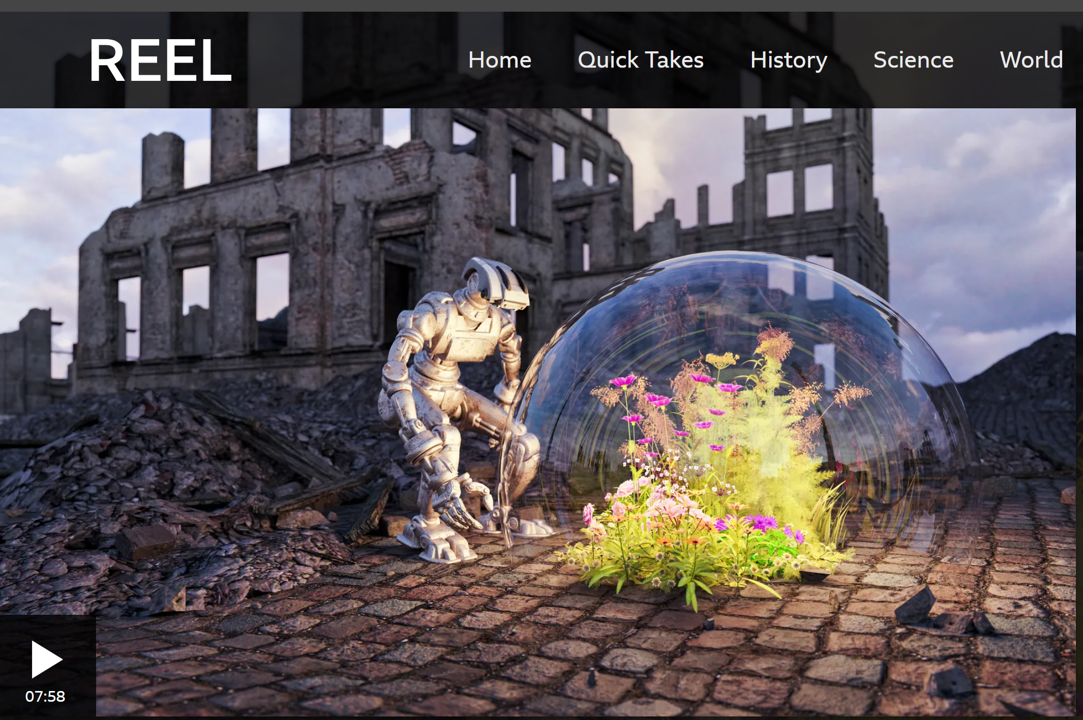

This short BBC video captures the stakes in minutes – worth watching: https://www.bbc.co.uk/reel/video/p0lwdg8y/is-this-how-ai-might-eliminate-humanity-

#AISafety

Also sharing Last Week in AI's excellent episode on existential risk: https://www.lastweekinai.com/e/aixrisk/